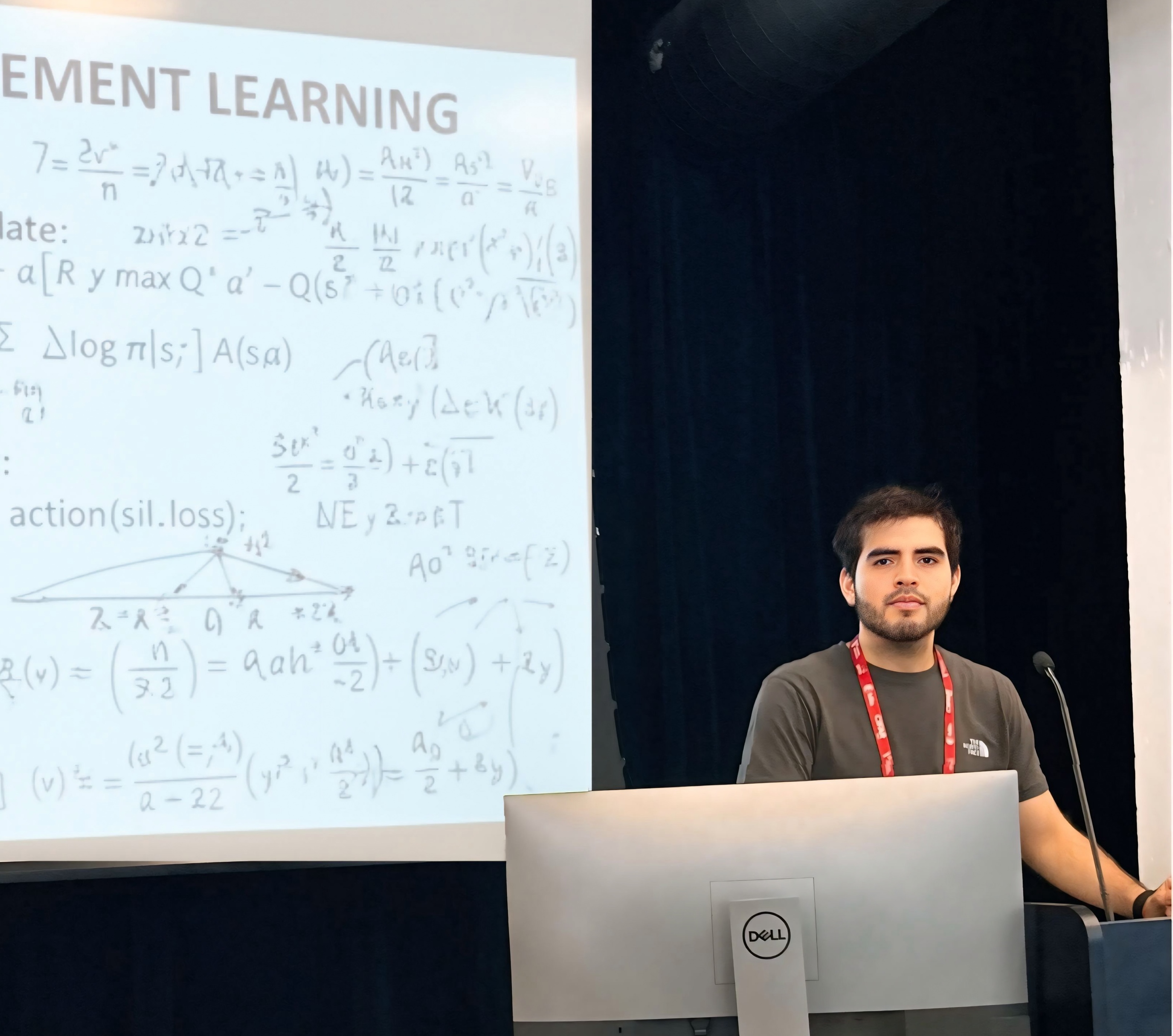

Hi! I’m a PhD student in Computer Science at the University of Castilla–La Mancha, Spain. I’m fascinated by how artificial agents can learn, adapt, and understand others — a bit like us. My research explores Theory of Mind, Multi-agent Systems, and Reinforcement Learning, guided by the question: what does it mean for AI to think socially?

I recently spent time at Umeå University (Sweden) working with Frank Dignum, and attended the DLRL Summer School at Mila (Canada), home to Yoshua Bengio and an inspiring community of researchers. I love connecting ideas from cognitive science and machine learning to imagine AI systems that act not only intelligently but also empathetically.

Education

- University of Castilla-La Mancha, Department of Computer Systems: Ph.D. Student in Computer Science (Jan. 2022 - present)
- Polytechnic University of Madrid, E.T.S. School of Computer Engineering: M.Sc. in Artificial Intelligence (Sep. 2020 - Jul. 2021)
- Yachay Tech University, School of Mathematical and Computer Science: B.S. in Computer Science (Apr. 2015 - Mar. 2020)

Experience

- Umeå University: Visiting Researcher (Jan. 2025 - Apr. 2025)
- Mila - Quebec AI Institute: Summer School Assistant (Jul. 2023)
- Yachay Tech University: Visiting Researcher (Sep. 2023 - Oct. 2023)

Honors & Awards

- Best Paper in ICT Applications, TICEC 2023 (2023)
- Inclusive AI Scholarship, CIFAR, Canada (2023)
- Doctoral Fellowship (FPI), Spanish Ministry of Science and Innovation (2022)
- Award for Scientific Merit, Municipality of La Maná, Ecuador (2021)

News

Selected Publications (6 of 37)

Theory of Mind and Continual Reinforcement Learning for Bullying Intervention

Luis Zhinin-Vera, José J. González-García, Víctor López-Jaquero, Elena Navarro, Pascual González

Neural Computing and Applications, 2025 (accepted, awaiting publication)

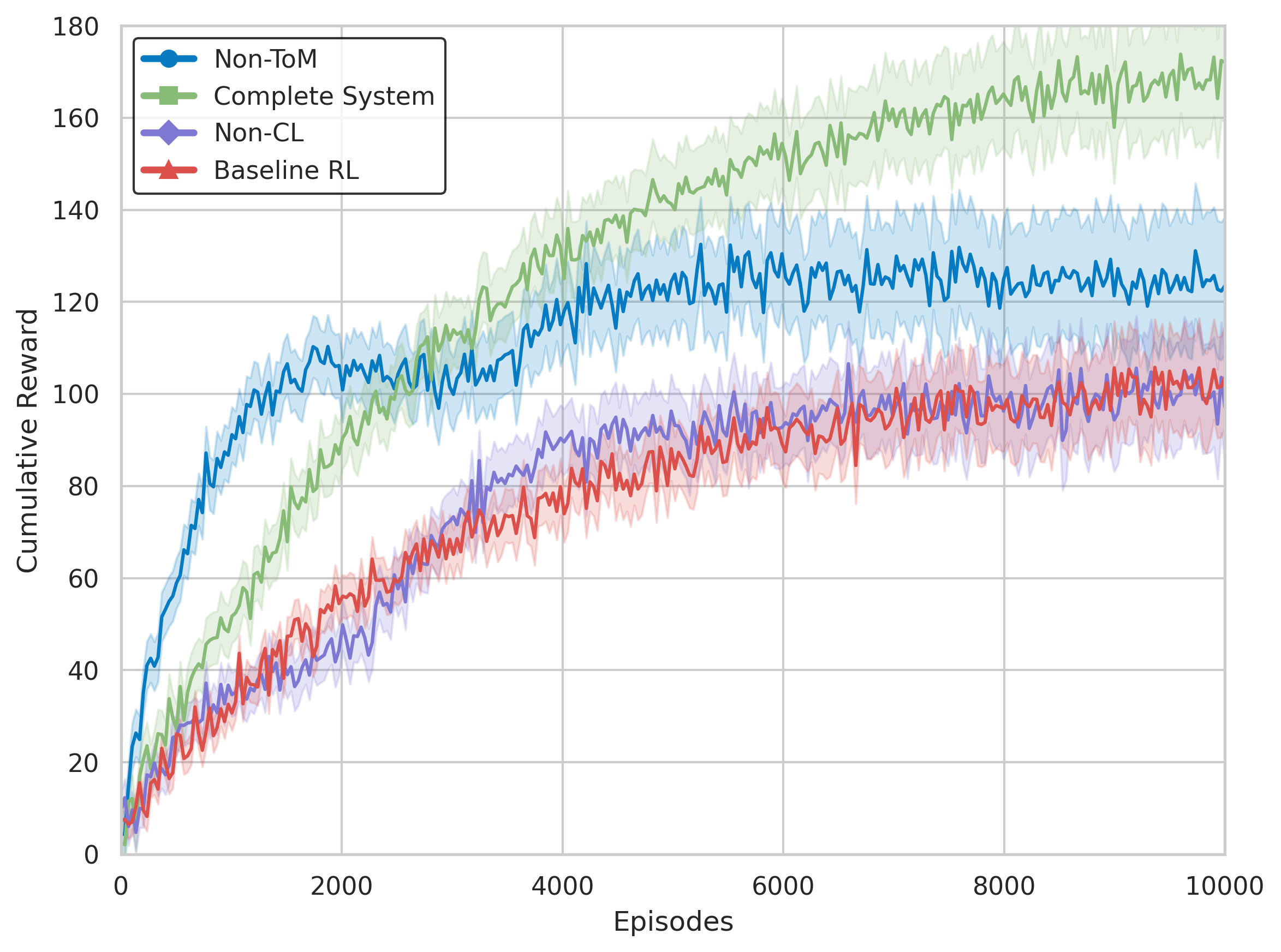

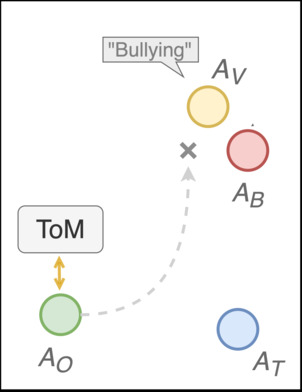

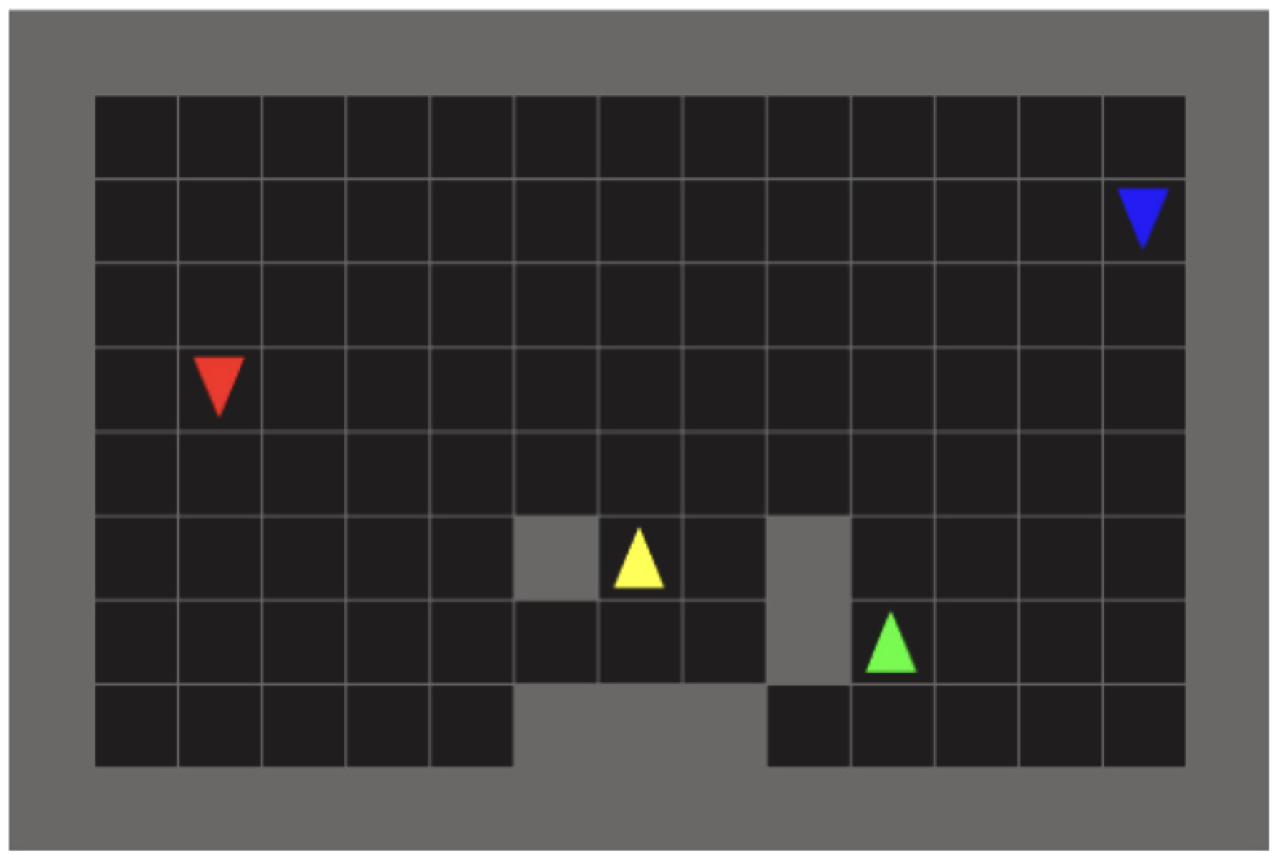

The proposed approach incorporates abstraction mechanisms derived from Theory-Theory (TT) and Simulation Theory (ST), enabling agents to reason about bullying interactions either through predefined rules or through simulated experiences. The system was evaluated in a simulated school environment with varying levels of bullying severity, demonstrating its ability to adapt intervention strategies dynamically. The results indicate that combining Theory of Mind (ToM), Reinforcement Learning (RL), and Continual Learning (CL) outperforms standard RL-based approaches, particularly in high-risk bullying scenarios. This work provides a foundation for socially intelligent AI systems capable of proactive, context-sensitive intervention in educational settings.

Multi-Agent Systems for Bullying Intervention

Luis Zhinin-Vera, José J. González-García, Víctor López-Jaquero, Elena Navarro, Pascual González

AAMAS '25: Proceedings of the 24th International Conference on Autonomous Agents and Multiagent Systems, 2025 (iCORE A*)

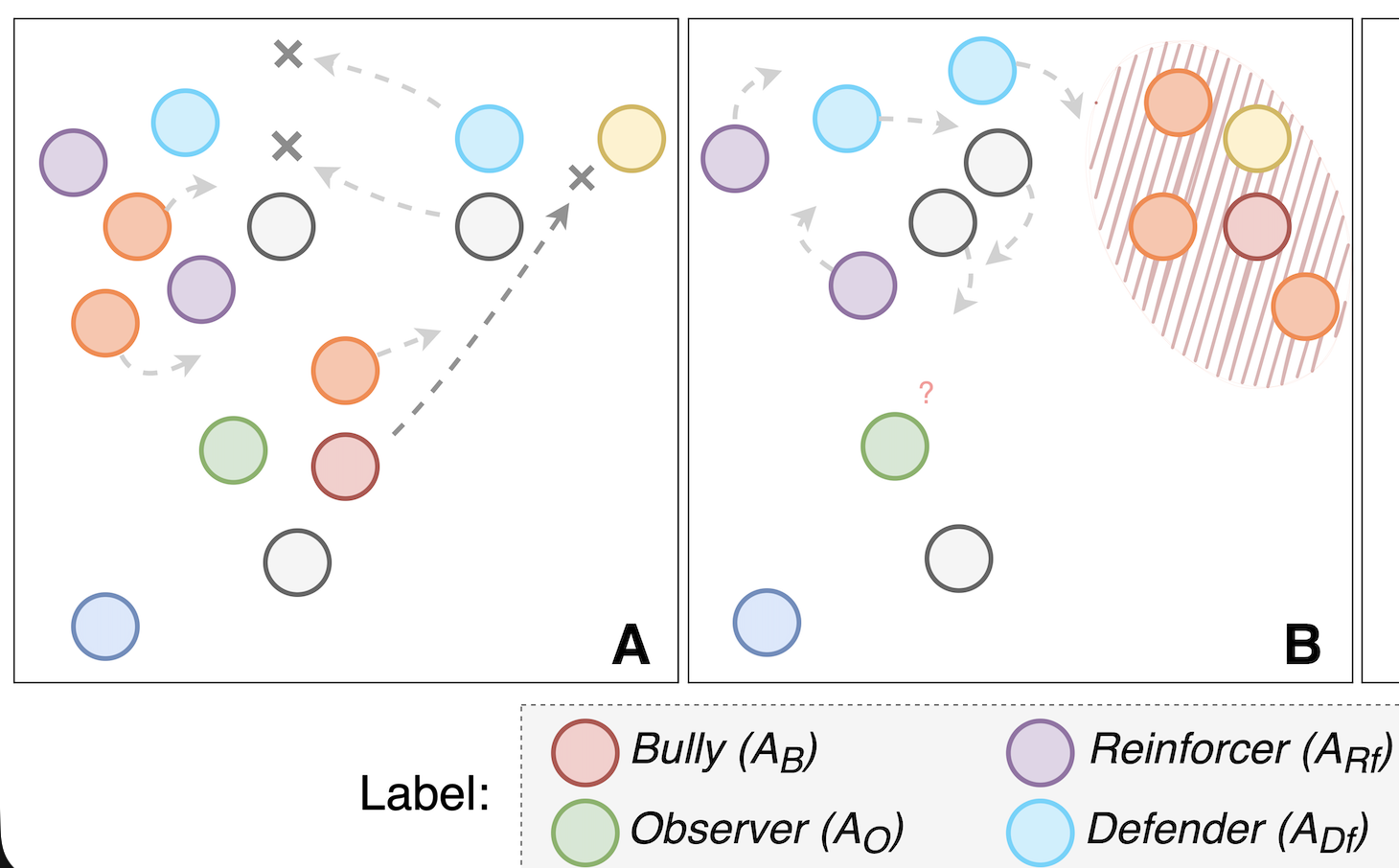

Our approach leverages Theory of Mind (ToM) to allow agents to infer the mental states of others, enabling context-aware decision-making for effective intervention strategies. Reinforcement Learning (RL) allows the observer agent to learn from past interactions, improving its ability to recognize bullying behaviors and refine its responses. Continual Learning (CL) ensures that the system can adapt to new behaviors and evolving environments, maintaining its effectiveness over time. We present abstraction mechanisms based on Theory-Theory and Simulation Theory, which allow the system to reason about complex social interactions either through predefined rules or through simulation. This paper outlines the theoretical framework and design of the proposed algorithm, offering a responsive, flexible, and adaptive solution for bullying prevention and intervention in educational contexts, where socially intelligent systems can play a key role in creating safer environments.
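To make the contrast between the two abstraction mechanisms concrete, here is a minimal sketch, under purely illustrative assumptions, of how an observer might infer another agent's intent either from predefined rules (Theory-Theory) or by running its own goal-conditioned policy in the other agent's place (Simulation Theory). The `Observation` fields, thresholds, goal labels, `toy_policy`, and the `INTERVENTIONS` table are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical features an observer agent might perceive about a peer.
@dataclass
class Observation:
    distance_to_peer: float  # how close the observed agent is to a peer
    repeated_contacts: int   # times it has approached that same peer
    peer_distress: float     # 0.0 (calm) to 1.0 (visibly distressed)


# Theory-Theory (TT): infer a mental state from explicit, predefined rules.
# The thresholds are illustrative assumptions, not values from the paper.
def infer_intent_tt(obs: Observation) -> str:
    if obs.repeated_contacts >= 3 and obs.peer_distress > 0.6:
        return "aggressive"
    if obs.distance_to_peer < 1.0 and obs.peer_distress > 0.3:
        return "uncertain"
    return "benign"


# Simulation Theory (ST): simulate the other agent with the observer's own
# policy under each candidate goal, and return the first goal whose simulated
# action matches the action that was actually observed.
def infer_intent_st(
    observed_action: str,
    obs: Observation,
    own_policy: Callable[[Observation, str], str],
    candidate_goals: List[str],
) -> str:
    for goal in candidate_goals:
        if own_policy(obs, goal) == observed_action:
            return goal
    return "unknown"


# Hypothetical stand-in for the observer's own goal-conditioned policy.
def toy_policy(obs: Observation, goal: str) -> str:
    if goal == "aggressive":
        return "approach"
    if goal == "benign" and obs.distance_to_peer < 1.0:
        return "talk"
    return "ignore"


# Hypothetical mapping from inferred intent to the observer's response.
INTERVENTIONS = {
    "aggressive": "intervene",
    "uncertain": "monitor",
    "benign": "no_action",
    "unknown": "monitor",
}

if __name__ == "__main__":
    obs = Observation(distance_to_peer=0.5, repeated_contacts=4, peer_distress=0.8)
    tt_intent = infer_intent_tt(obs)
    st_intent = infer_intent_st("approach", obs, toy_policy, ["benign", "aggressive"])
    print(tt_intent, INTERVENTIONS[tt_intent])  # aggressive intervene
    print(st_intent, INTERVENTIONS[st_intent])  # aggressive intervene
```

In a learning setting, a trained RL policy could take the place of the fixed `INTERVENTIONS` lookup; the table only keeps the sketch short.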

Mindful Human Digital Twins: Integrating Theory of Mind with multi-agent reinforcement learning

Luis Zhinin-Vera, Elena Pretel, Víctor López-Jaquero, Elena Navarro, Pascual González

Applied Soft Computing, 2025 (Open Access)

In this paper, we propose a novel approach that leverages Theory-Theory (TT) and Simulation Theory (ST) to enhance Theory of Mind (ToM) within the Multi-Agent Reinforcement Learning (MARL) framework. Building on the Digital Twin (DT) framework, we introduce the Mindful Human Digital Twin (MHDT), an intelligent system enriched with ToM capabilities that bridges the gap between artificial agents and human-like interactions. We used Gymnasium, the successor to OpenAI Gym, to run simulations and evaluate the effectiveness of our approach. This work represents a significant step toward socially intelligent Artificial Intelligence (AI) systems capable of natural and intuitive interactions with both their environment and other agents, and the approach is particularly effective in addressing critical social challenges such as school bullying. This research not only advances the growing field of MARL but also paves the way for sophisticated AI systems with enhanced ToM abilities, tailored to complex and sensitive real-world applications.

Integrating Theory of Mind, Reinforcement Learning, and Continual Learning

Luis Zhinin-Vera, Víctor López-Jaquero, Elena Navarro, Pascual González, Frank Dignum

Submitted to Journal of Artificial Intelligence, 2025

We propose an integrative multi-agent architecture that combines *Theory of Mind* (ToM), *Reinforcement Learning* (RL), and *Continual Learning* (CL). Each agent operates under role-specific decision architectures and social constraints. The observer agent employs ToM-based reasoning to infer others’ intentions and goals, guiding interventions consistent with social norms. A risk-sensitive behavioral engine regulates interactions by dynamically adapting roles, rules, and available actions across different risk conditions. Experimental simulations across low-, moderate-, and high-risk environments demonstrate that the proposed system effectively fosters adaptive and norm-consistent interventions. Agents exhibit emergent behavioral role transitions, particularly among initially passive bystanders, and maintain long-term decision performance under evolving social dynamics. Quantitative metrics, including Observer Decision Accuracy (ODA), Social Role Transition Rate (SRTR), Intervention Success Rate (ISR), and Average Episode Reward (AER), confirm the robustness and adaptability of the proposed framework.

Continual learning, deep reinforcement learning, and microcircuits: a novel method for clever game playing

Oscar Chang, Leo Ramos, Manuel Eugenio Morocho-Cayamcela, Rolando Armas, Luis Zhinin-Vera

Multimedia Tools and Applications, 2024 (Open Access)

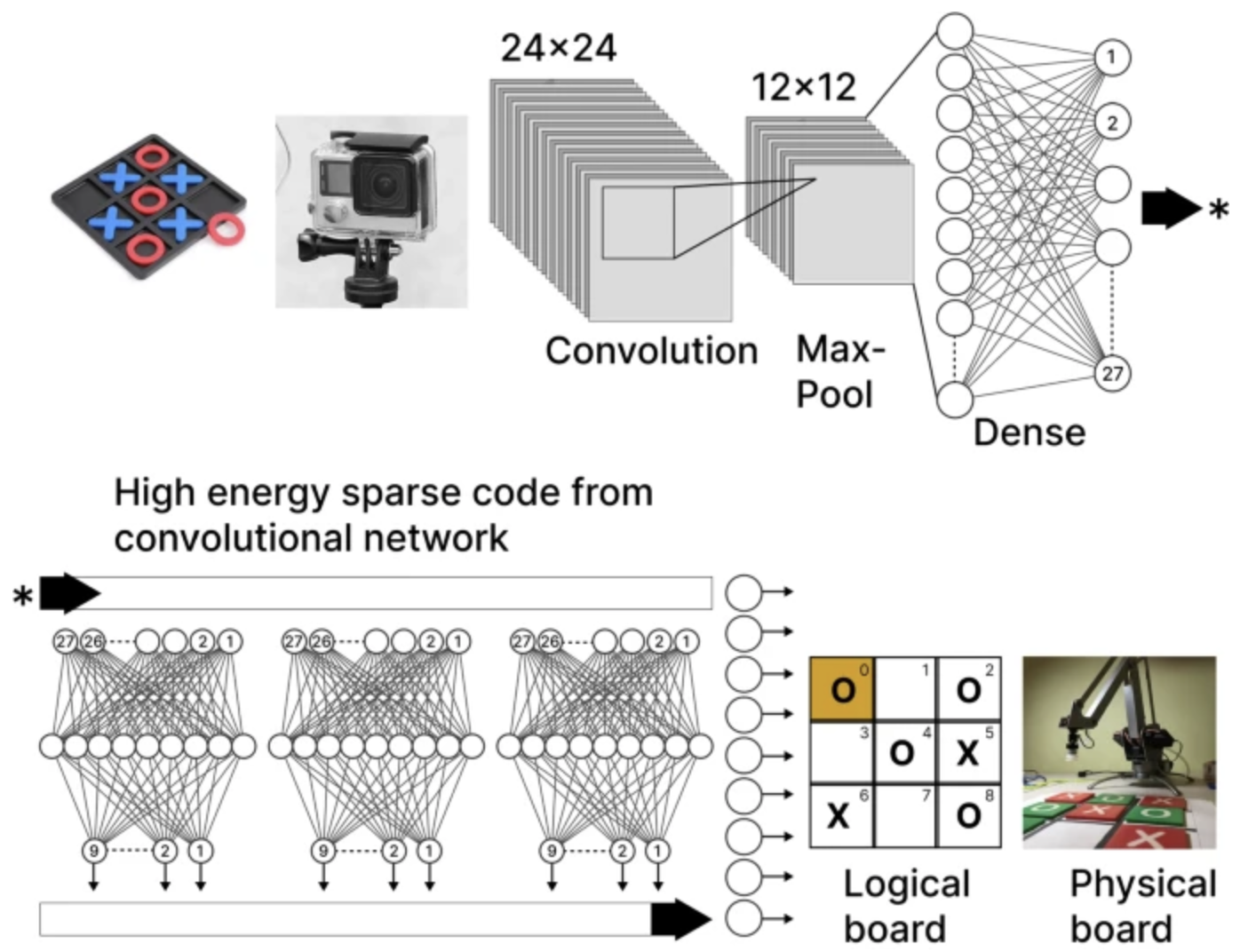

Contemporary neural networks frequently suffer from catastrophic forgetting, in which newly acquired learning overwrites and erases previously learned information. The paradigm of continual learning offers a promising solution by enabling intelligent systems to retain and build upon their acquired knowledge over time. This paper introduces a novel approach within the continual learning framework, employing deep reinforcement learning agents that process raw pixel data and interact with microcircuit-like components. These agents autonomously advance through a series of learning stages, culminating in a neural network system optimized for predictive performance in the game of tic-tac-toe. The agents operate in sequential order, and each is tasked with achieving forward-looking objectives based on Bellman’s principles of reinforcement learning. Knowledge retention is facilitated by specific microcircuits, which securely store the insights gained by each agent. During training, these microcircuits work in concert, employing high-energy, sparse encoding techniques to improve learning efficiency and effectiveness. The core contribution of this paper is an artificial neural network system capable of accurately predicting tic-tac-toe moves, akin to the observational strategies employed by humans. Our experimental results show that after approximately 5000 cycles of backpropagation, the system significantly reduced the training loss, thereby increasing the expected cumulative reward. This gain in training efficiency translates into superior predictive capabilities, enabling the system to win consistently by anticipating up to four moves ahead.
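The forward-looking objective based on Bellman's principles can be illustrated with the standard one-step Bellman target used in Q-learning, $r + \gamma \max_{a'} Q(s', a')$. The sketch below is a generic tabular version assumed for illustration only; it is not the paper's microcircuit-based network, and the board encoding, discount factor `gamma`, and learning rate `lr` are hypothetical choices.

```python
from typing import Dict, Hashable, Iterable, Tuple

# Tabular Q-function keyed by (state, action).
QTable = Dict[Tuple[Hashable, Hashable], float]


def bellman_target(reward: float, next_q_values: Iterable[float],
                   gamma: float, done: bool) -> float:
    """One-step Bellman target: r + gamma * max_a' Q(s', a').

    At a terminal state (a won, lost, or drawn tic-tac-toe game) there is
    no future value to bootstrap from, so the target is just the reward.
    """
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def q_update(q: QTable, state: Hashable, action: Hashable,
             target: float, lr: float) -> float:
    """Move Q(s, a) a fraction `lr` of the way toward the Bellman target."""
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + lr * (target - old)
    return q[(state, action)]


if __name__ == "__main__":
    q: QTable = {}
    # Hypothetical encoding: the board as a 9-character string, the action as a cell index.
    board = "X O  O  X"
    target = bellman_target(reward=0.0, next_q_values=[0.2, 0.5, -1.0], gamma=0.9, done=False)
    print(round(target, 3))                                  # 0.45
    print(round(q_update(q, board, 4, target, lr=0.5), 3))   # 0.225
```

The `done` flag matters in tic-tac-toe because every game ends in a terminal position; bootstrapping past it would add value that cannot exist.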

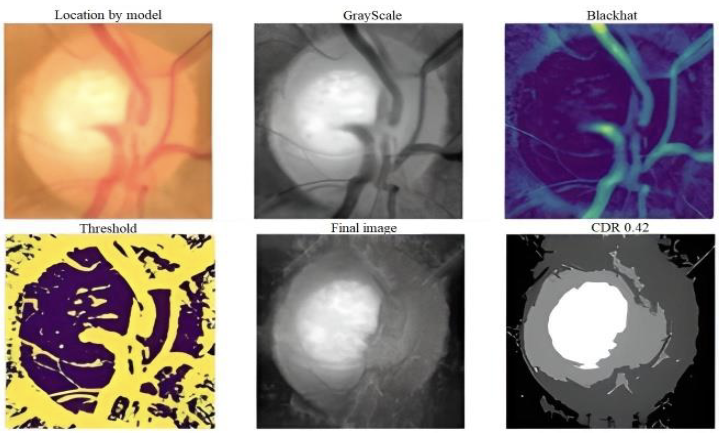

Deep Learning for Glaucoma Detection: R-CNN ResNet-50 and Image Segmentation

Marlene S. Puchaicela-Lozano, Luis Zhinin-Vera, Ana J. Andrade-Reyes, Dayanna M. Baque-Arteaga, Carolina Cadena-Morejón, Andrés Tirado-Espín, Lenin Ramírez-Cando, Diego Almeida-Galárraga, Jonathan Cruz-Varela, Fernando Villalba Meneses

Journal of Advances in Information Technology (JAIT), 2023 (Open Access)

Glaucoma is a leading cause of irreversible blindness worldwide, affecting millions of people. Early diagnosis is essential to reduce visual loss, and various techniques are used for glaucoma detection. In this work, we propose a hybrid method for glaucoma fundus image localization that combines a pre-trained Region-based Convolutional Neural Network (R-CNN) with a ResNet-50 backbone and cup-to-disc area segmentation. The ACRIMA and ORIGA databases were used to evaluate the proposed approach. The ResNet-50 model achieved an average confidence of 0.879, indicating that it is a reliable alternative for glaucoma detection. Moreover, the cup-to-disc ratio was calculated using gradient-color-based optic disc segmentation; it agreed with the ResNet-50 results in 80% of cases, with an average confidence score of 0.84. The proposed approach can determine whether glaucoma is present with a final accuracy of 95%, and it provides specific criteria to guide the specialist toward an accurate diagnosis. In summary, the proposed model provides a reliable and secure method for diagnosing glaucoma from fundus images.
